ChatGPT and other generative AI technologies are wowing — and sometimes alarming — users with their human-like abilities to carry on conversations and perform tasks.

But they’re also sparking concern over the amount of energy needed to create and operate the systems. A researcher at the University of Washington estimated what it took to build the ChatGPT app that was publicly released in November, determining that training the large language model behind it consumed roughly 10 gigawatt-hours (GWh) of electricity. That’s on par with the annual energy use of roughly 1,000 U.S. homes.
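For readers who want to check the comparison, here is a minimal sketch of the arithmetic in Python. It uses only the two figures quoted above; the function and constant names are illustrative, not from the researcher's work. The per-home figure it produces is simply what the comparison implies, and it sits close to commonly cited averages for U.S. household electricity use.

```python
# Figures quoted in the article (both are rough estimates).
TRAINING_ENERGY_GWH = 10.0   # estimated energy used to train the model
EQUIVALENT_HOMES = 1_000     # "roughly 1,000 U.S. homes"

KWH_PER_GWH = 1_000_000      # unit conversion: 1 GWh = 1,000,000 kWh


def implied_kwh_per_home(training_gwh: float, homes: int) -> float:
    """Annual energy use per home, in kWh, implied by the comparison."""
    return training_gwh * KWH_PER_GWH / homes


print(implied_kwh_per_home(TRAINING_ENERGY_GWH, EQUIVALENT_HOMES))  # 10000.0
```

In other words, the comparison assumes each home uses about 10,000 kWh a year.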

The number is approximate and other researchers have calculated much less energy use for creating GPT-3 and similar-sized models. But GPT-3 was only the start. GPT-4, which was trained on much more data, and other new models gobble even more power.

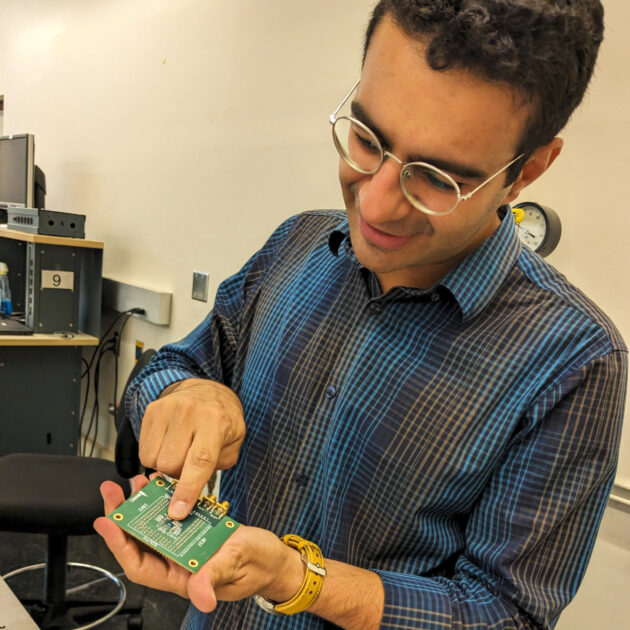

“If this is going to be the beginning of a revolution and everyone wants to develop their own model, and everyone is going to start using them — there’s a million different applications — then this number is going to get bigger and bigger to the level that it’s not going to be sustainable,” said Sajjad Moazeni, UW assistant professor of electrical and computer engineering.

Moazeni and other engineers want to address that challenge. They’re working on solutions to make the technology that creates and operates these generative AI models more energy efficient.

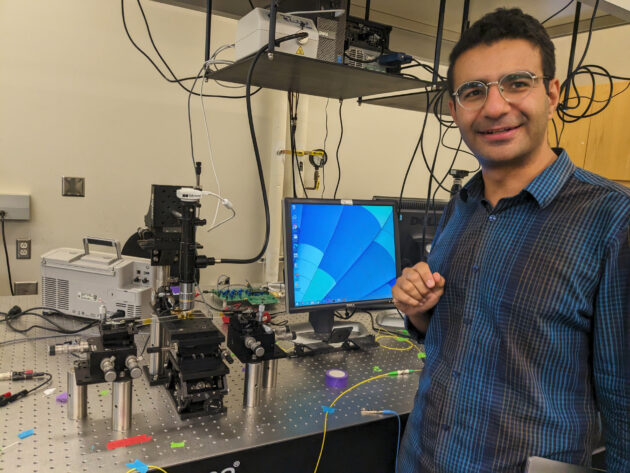

In the basement of the UW’s Paul G. Allen Center for Computer Science & Engineering, Moazeni has a lab where he and fellow researchers are manipulating and modifying ultra-high-tech computer chips with unimaginably small transistors.

These chips are at the core of GPT and other large language models and their applications. They function as the brain of computer networks that consume and process data. The chips talk to each other and pass information via electrical and optical signals, acting as the metaphorical neurons of the system.

Moazeni wants to improve that signaling by incorporating photonics, which will allow chips to communicate through optical signals sent over optical fibers instead of the less-efficient, lower-performing electrical signals currently in use.

Large language models use special, energy-hungry chips called graphics processing units, or GPUs. The GPUs and related electronics are an essential part of computer servers that are housed in data centers. The data centers are where the “cloud” resides.

The processors consume energy to operate and produce heat, requiring cooling systems using fans or water to keep the machines from overheating. It all adds up to significant power use.

Giant tech companies offering cloud services — such as Amazon Web Services, Microsoft’s Azure and Alphabet’s Google Cloud — own many of the massive data centers where generative AI tools are trained and operate. The companies have all pledged to cut their greenhouse gas emissions to zero or less and are pursuing renewable energy sources to reach those targets.

But the rise in generative AI will keep boosting demand for data centers. It’s something the companies are taking into consideration as they work toward carbon goals. Amazon, for example, expects to run all of its operations on renewables by 2025.

“We’re not worried or having concerns about hitting our [renewable energy] goal,” said Charley Daitch, AWS’s director of energy and water strategy, in a recent GeekWire interview.

“But looking at longer term — like late 2020s, 2030 — with the opportunity and potential for generative AI, it’s absolutely critical that we find new ways to bring on renewables and even larger scale,” Daitch said.

Before people started worrying about the energy use of large language models, alarms were being sounded over crypto mining and Bitcoin farms. To mint cryptocurrency coins, miners also rely on power-consuming computer farms. In the U.S., crypto mining operations use enough energy to power millions of homes, according to a recent analysis by the New York Times.

Large language models gulp energy not just during training, but also when people query them and when the systems are maintained. The juice needed for ChatGPT alone could be 1 GWh of energy each day, Moazeni estimated.
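To put the daily figure in perspective, the sketch below compares it with the roughly 10 GWh training estimate cited earlier in this article. Both numbers are approximate and may not describe the same version of the model, so treat the result as a back-of-the-envelope illustration; the function names are made up for this example.

```python
# Estimates quoted in the article; both are approximate.
TRAINING_ENERGY_GWH = 10.0  # one-time energy to train the model
DAILY_USE_GWH = 1.0         # estimated energy to run ChatGPT for one day


def days_to_match_training(training_gwh: float, daily_gwh: float) -> float:
    """Days of operation that would use as much energy as training did."""
    return training_gwh / daily_gwh


def annual_use_gwh(daily_gwh: float, days_per_year: int = 365) -> float:
    """Energy used over a year if the daily estimate held constant."""
    return daily_gwh * days_per_year


print(days_to_match_training(TRAINING_ENERGY_GWH, DAILY_USE_GWH))  # 10.0
print(annual_use_gwh(DAILY_USE_GWH))                               # 365.0
```

If both estimates hold, about 10 days of everyday use would consume as much energy as the original training run.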

That means figuring out ways to make the computer networks function smarter and more efficiently to keep the virtual conversations going.

“I see a lot of opportunities,” Moazeni said. “We need the creativity. We need new ideas. We need new architectures [for computer networks]. As a researcher, I get very excited about that.”